Artificial Intelligence Demystified

A step-by-step introduction to Artificial Intelligence.

"Artificial Intelligence" (AI) is one of the most popular buzzwords of the 21st century, and it is used in a wide range of contexts. Since the 1950s, when the term came into use as the name of a research practice and an academic field of study, virtually every sector of the global economy has felt its presence and its lasting, progressive impact. AI's relevance to the advancement of humanity has even motivated international organizations such as UNESCO to lead the call for including AI curricula in K-12 schools globally, to promote AI literacy among children.

People's first impressions of AI are often shaped by sci-fi films. For instance, in The Terminator films released between 1984 and 2019 and in the 2004 film I, Robot, AI machines are represented as robots that have developed human-like cognitive abilities. These machines learn the ways of humans and then attempt to take over the world by manipulative means. To dispel doubts and alleviate fears about AI, this article walks through a chain of underlying AI concepts to show that this negative portrayal is a mere figment of imagination.

What is Artificial Intelligence?

There are many popular definitions of artificial intelligence (AI). One of the most relevant comes from the late John McCarthy, notable for coining the term "artificial intelligence" in the mid-1950s and for being one of the field's earliest developers. McCarthy described AI as:

"The Science and Engineering of making intelligent machines".

The definition presents computers as inventions capable of mimicking human behaviour; in other words, AI is the simulation of human intellect by machines. Such imitation is observed in self-driving cars, smart assistants, manufacturing robots, and recommender systems, to name a few. But how do computers or machines reproduce human actions? How do they decipher and replicate them? Most of the answers to these questions are provided by a famous AI concept, Machine Learning (ML).

Machine Learning

"Machine Learning (ML) is the field of study that gives computers the ability to learn without being explicitly programmed." - Arthur Samuel, 1959

As a pioneer of AI research and ML, Arthur Samuel offers above an easy-to-understand statement of what ML is about. His straightforward explanation introduces ML as a branch of knowledge that enables machines to develop the capacity for learning without following explicitly programmed, rule-based processes. A paradigm commonly used by ML practitioners illustrates the point further.

The Paradigm and Applications of Rule-Based and ML Approaches

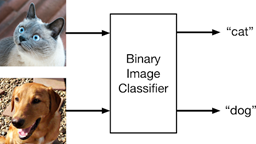

Building a system that classifies animals based on their images becomes easier when the system only needs to identify a few animals, for instance, cats and dogs.

With a rule-based programming process, the developer must write a set of specific functions that identify the physical features or body parts unique to each animal. A developer will find this approach cumbersome, for it involves making a long list of rules and parameters applicable to all kinds of dog or cat images. The functions must be able to read image data, locate the animal's position in the image, identify its physical features, and use the recognized features to make a hypothesis or prediction about the kind of animal present. These rules capture the complexity of the procedure. They call for a sophisticated system with little redundant code that is flexible enough to handle any image data, among other conditions.
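To make the rule-based idea concrete, here is a minimal sketch. It assumes the image has already been reduced to a few measured features; the feature names (`ear_shape`, `snout_length_cm`, `weight_kg`) and the thresholds are hypothetical, chosen only for illustration.

```python
# Rule-based classifier: every decision is a rule written by hand.
# Feature names and thresholds are hypothetical, for illustration only.

def classify_animal(features):
    """Return 'cat', 'dog', or 'unknown' from hand-written rules."""
    ear_shape = features.get("ear_shape")
    snout_length_cm = features.get("snout_length_cm", 0.0)
    weight_kg = features.get("weight_kg", 0.0)

    if ear_shape == "pointed" and snout_length_cm < 3.0 and weight_kg < 8.0:
        return "cat"
    if snout_length_cm >= 3.0 or weight_kg >= 8.0:
        return "dog"
    # Every animal that fits neither rule needs yet another rule.
    return "unknown"


small_cat = {"ear_shape": "pointed", "snout_length_cm": 2.0, "weight_kg": 4.0}
large_dog = {"ear_shape": "floppy", "snout_length_cm": 9.0, "weight_kg": 30.0}
small_dog = {"ear_shape": "floppy", "snout_length_cm": 2.5, "weight_kg": 3.0}

print(classify_animal(small_cat))  # cat
print(classify_animal(large_dog))  # dog
print(classify_animal(small_dog))  # unknown
```

The small dog slips through both rules, which hints at why the list of rules keeps growing as more kinds of images must be handled.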

In contrast, an ML approach makes the task easier and less elaborate. Unlike the rule-based approach, an ML system is not guided by a long list of rules. As Arthur Samuel's definition stresses, the machine learns instead, through a process that requires large amounts of data paired with the expected response. ML works by feeding data, such as cat and dog images, to a machine learning algorithm. The algorithm then uses the data to train a model that captures the distinguishing features, and the trained model is eventually deployed to make predictions on new input data.
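The contrast with hand-written rules can be sketched in a few lines. In this toy example the decision threshold is not typed in by the developer but chosen from labelled examples. The single feature (weight in kg), the data, and the function names `train_threshold` and `predict` are assumptions made for illustration; real image classifiers learn from far richer data.

```python
# Learn a one-feature decision rule from labelled examples instead of
# hand-writing it. The weights (kg) and labels are made-up toy data.

training_data = [
    (3.5, "cat"), (4.0, "cat"), (4.8, "cat"), (5.5, "cat"),
    (9.0, "dog"), (12.0, "dog"), (20.0, "dog"), (30.0, "dog"),
]

def train_threshold(examples):
    """Pick the weight threshold that misclassifies the fewest examples."""
    weights = sorted(w for w, _ in examples)
    # Candidate thresholds: midpoints between neighbouring weights.
    candidates = [(a + b) / 2 for a, b in zip(weights, weights[1:])]

    def errors(threshold):
        return sum(
            ("dog" if w > threshold else "cat") != label
            for w, label in examples
        )

    return min(candidates, key=errors)

def predict(threshold, weight_kg):
    """Apply the learned rule to a new, unseen weight."""
    return "dog" if weight_kg > threshold else "cat"

threshold = train_threshold(training_data)
print(threshold)                 # 7.25
print(predict(threshold, 4.2))   # cat
print(predict(threshold, 15.0))  # dog
```

If the training data changes, the threshold changes with it, without anyone editing the code.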

A Technical Viewpoint of Machine Learning

Tom Mitchell, an eminent researcher of ML and pattern recognition, defines ML from a mainly technical perspective in his 1997 formulation:

"A computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E. — Tom Mitchell, 1997"

According to this definition, in ML the machine is given a task to perform, learns from some experience (data), and is subject to a performance measure that checks how well it performs the task given the experience gained. This applies to the earlier ML paradigm of classifying cats and dogs. ML algorithms are fed data (experience) to learn patterns, extract meaningful features, and make predictions, which are then evaluated to measure their performance on the task.
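Mitchell's three ingredients can be illustrated with a small sketch. The task T, experience E, and performance measure P below are toy choices, and the data is made up and arranged so that the improvement is easy to see; on real data, more experience usually helps but is not guaranteed to raise the score at every step. The nearest-centroid model is one simple option among many.

```python
# Mitchell's E, T, P on a toy problem (all numbers are made-up toy data):
#   T: label an animal as "cat" or "dog" from its weight in kg
#   E: the labelled training examples seen so far
#   P: accuracy on a fixed, held-out test set

experience = [
    (6.0, "cat"), (7.0, "dog"), (4.0, "cat"), (15.0, "dog"),
    (3.5, "cat"), (25.0, "dog"), (4.5, "cat"), (13.0, "dog"),
]
test_set = [
    (3.0, "cat"), (5.0, "cat"), (6.8, "cat"), (8.5, "cat"),
    (10.0, "dog"), (12.0, "dog"), (20.0, "dog"), (30.0, "dog"),
]

def train(examples):
    """Nearest-centroid model: the mean weight of each label."""
    by_label = {}
    for weight, label in examples:
        by_label.setdefault(label, []).append(weight)
    return {label: sum(ws) / len(ws) for label, ws in by_label.items()}

def predict(model, weight):
    """Return the label whose mean weight is closest."""
    return min(model, key=lambda label: abs(weight - model[label]))

def accuracy(model, examples):
    correct = sum(predict(model, w) == label for w, label in examples)
    return correct / len(examples)

scores = []
for n in (2, 4, 8):
    model = train(experience[:n])
    scores.append(accuracy(model, test_set))
    print(f"E = {n} examples -> P = {scores[-1]:.3f}")
# E = 2 examples -> P = 0.750
# E = 4 examples -> P = 0.875
# E = 8 examples -> P = 1.000
```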

Branches of Machine Learning

There are three major branches of ML, namely supervised learning, unsupervised learning, and reinforcement learning. Each is used to solve real-life problems.

• Supervised Learning

“Supervised learning, also known as supervised machine learning, is a subcategory of machine learning and artificial intelligence. It is defined by its use of labelled datasets to train algorithms to classify data or predict outcomes accurately - IBM 2020”

Supervised learning, as the name implies, requires some form of supervision. A labelled dataset supervises the ML model and enables it to learn over time. In the previous paradigm, the images labelled "cat" or "dog" make up the labelled dataset for the classification. Supervised learning uses this dataset to train the machine to identify and differentiate the animals appropriately. Some supervised machine learning algorithms include linear regression, logistic regression, the Naive Bayes classifier, K-nearest neighbors (KNN), support vector machines (SVM), and neural networks.
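As an illustration, K-nearest neighbors is simple enough to sketch in plain Python. The two features (weight and snout length), the data points, and the function name `knn_predict` are assumptions for this example; in practice, a library implementation and real measurements would be used.

```python
# k-nearest neighbours (k-NN) in plain Python on made-up toy data.
# Each example is ((weight_kg, snout_length_cm), label).
from collections import Counter
import math

labelled_data = [
    ((4.0, 2.0), "cat"), ((3.5, 1.8), "cat"), ((5.0, 2.2), "cat"),
    ((20.0, 9.0), "dog"), ((30.0, 11.0), "dog"), ((12.0, 7.0), "dog"),
]

def knn_predict(data, point, k=3):
    """Label `point` by majority vote among its k closest examples."""
    neighbours = sorted(data, key=lambda ex: math.dist(ex[0], point))[:k]
    votes = Counter(label for _, label in neighbours)
    return votes.most_common(1)[0][0]

print(knn_predict(labelled_data, (4.5, 2.1)))    # cat
print(knn_predict(labelled_data, (25.0, 10.0)))  # dog
```

The labels do the supervising here: the model never sees a rule about cats or dogs, only examples that carry the right answer.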

• Unsupervised Learning

“Unsupervised Learning is a machine learning technique in which the users do not need to supervise the model. Instead, it allows the model to work on its own to discover patterns and information that was previously undetected. It mainly deals with the unlabelled data. - Daniel Johnson 2022”

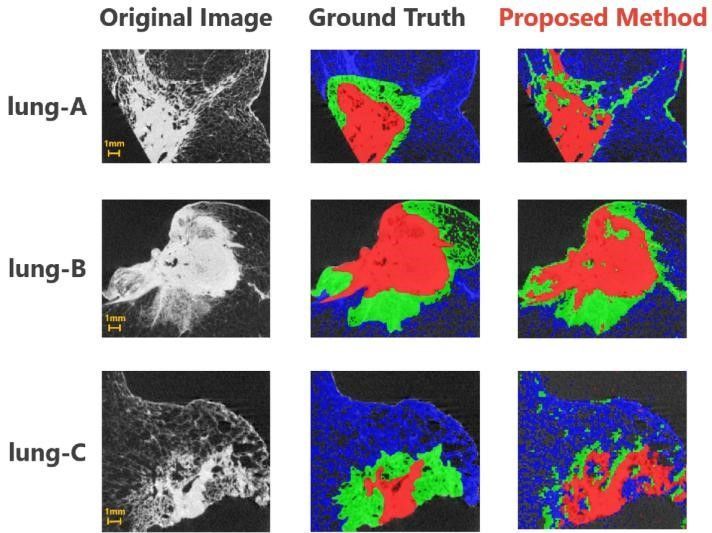

As the definition above indicates, unsupervised learning does not require supervision. This learning mode uses unlabelled or unclassified training data and focuses mainly on discovering hidden and interesting patterns in that data. One application is in medical imaging, for distinguishing between different types of tissues and organs. This works by clustering, that is, grouping similar tissues based on their features. Some examples of unsupervised learning algorithms are hierarchical clustering, K-means clustering, principal component analysis, singular value decomposition, and independent component analysis.
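The clustering idea can be sketched with a bare-bones K-means on one-dimensional values. The numbers stand in for pixel intensities from two kinds of tissue and are made up; the function name `k_means` and the choice of starting centroids are assumptions for illustration, not a description of how medical imaging software works.

```python
# K-means clustering in plain Python on unlabelled, made-up 1-D values
# (imagine pixel intensities from two kinds of tissue).

def k_means(values, centroids, iterations=10):
    """Alternate between assigning points and recomputing centroids."""
    for _ in range(iterations):
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)),
                          key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Keep the old centroid if a cluster ends up empty.
        centroids = [sum(c) / len(c) if c else centroids[i]
                     for i, c in enumerate(clusters)]
    return centroids, clusters

intensities = [12, 15, 14, 10, 80, 85, 78, 90, 13, 82]
# Simplification: start from the smallest and largest value rather
# than from random points, so the run is repeatable.
start = [min(intensities), max(intensities)]
centroids, clusters = k_means(intensities, start)

print(centroids)  # [12.8, 83.0]
print(clusters)   # [[12, 15, 14, 10, 13], [80, 85, 78, 90, 82]]
```

No labels were supplied at any point; the two groups emerge from the values alone.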

• Reinforcement Learning

“Reinforcement learning is a machine learning training method based on rewarding desired behaviors and/or punishing undesired ones. In general, a reinforcement learning agent can perceive and interpret its environment, take actions and learn through trial and error. - Joseph M. Carew 2021”

Based mainly on learning by trial and error, reinforcement learning involves a reward and punishment system that rewards a machine for making the right decision and punishes it for making a wrong one. A popular application is found in gaming, where an AI agent or game character is trained to function as an autonomous bot. An example is the popular game of Go. AlphaGo Zero learned the game from scratch: after learning by playing against itself, the program outperformed earlier versions of AlphaGo, which had already beaten professional human Go players. Examples of reinforcement learning algorithms are Q-learning, policy iteration, and value iteration.
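A tiny Q-learning sketch shows the reward and punishment loop at work. The environment is a made-up corridor of five cells, far simpler than Go; the reward values, the penalty for walking into the wall, and the learning rate and discount factor are all illustrative assumptions.

```python
# Tabular Q-learning on a made-up 5-cell corridor. The agent starts in
# cell 0 and the goal is cell 4. Rewards, penalties and hyperparameters
# are illustrative assumptions.
import random

N_STATES, GOAL = 5, 4
ACTIONS = {"left": -1, "right": +1}
ALPHA, GAMMA = 0.5, 0.9  # learning rate, discount factor

def step(state, action):
    """Return (next_state, reward) for one move in the corridor."""
    target = state + ACTIONS[action]
    if target < 0:
        return state, -1.0   # punished for walking into the wall
    if target == GOAL:
        return target, 1.0   # rewarded for reaching the goal
    return target, 0.0

rng = random.Random(0)       # fixed seed so the run is repeatable
q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}

for _ in range(500):         # episodes of trial and error
    state = 0
    while state != GOAL:
        action = rng.choice(list(ACTIONS))   # explore at random
        next_state, reward = step(state, action)
        best_next = max(q[(next_state, a)] for a in ACTIONS)
        # Move the estimate toward reward + discounted future value.
        q[(state, action)] += ALPHA * (reward + GAMMA * best_next
                                       - q[(state, action)])
        state = next_state

# The learned policy: the best-valued action in each non-goal cell.
policy = [max(ACTIONS, key=lambda a: q[(s, a)]) for s in range(GOAL)]
print(policy)  # ['right', 'right', 'right', 'right']
```

Nobody tells the agent that "right" is the correct move; it works that out from the rewards it collects while wandering.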

From the foregoing, we can generalize that, for tasks like these, ML outperforms the conventional programming method in achieving AI. The rule-based method compares poorly with ML, for it is characterized by a bulky codebase guided by complex rules that entail demanding code optimization and management. ML instead feeds algorithms with data and empowers them to recognize patterns in the data. ML also enables the adjustment and optimization of the algorithms to produce a model that makes predictions on new data.

Contributions of Deep Learning to Machine Learning

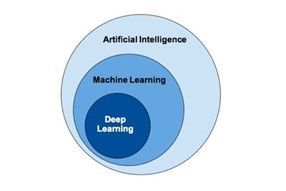

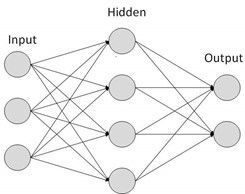

“Deep learning is a subset of machine learning, which is essentially a neural network with three or more layers. These neural networks attempt to simulate the behavior of the human brain—albeit far from matching its ability—allowing it to “learn” from large amounts of data. - IBM 2021”

Deep learning is a subset of ML inspired by the structure and function of the brain, which is an interconnection of many parallel processing elements known as neurons.

Deep learning is pictured as a neural network with three or more layers. Each layer learns to pick out particular features of its input. In image recognition, for example, successive layers pick out different features of an image. Deep learning is the key technology behind autonomous vehicles, voice recognition systems, and recommender systems, to mention a few. It is attracting attention and yielding results that are difficult to achieve with traditional machine learning algorithms.
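To show what "layers" means in practice, here is a minimal sketch of data flowing through an input layer, one hidden layer, and an output layer. The weights are set by hand purely for illustration (a real network learns them from data), and the helper names `relu`, `dense`, and `forward` are assumptions. With these weights the network computes XOR, a function that a single linear layer cannot represent.

```python
# Forward pass through a tiny network: input layer -> hidden layer ->
# output layer. The weights are hand-set for illustration only; a real
# network would learn them from data.

def relu(x):
    """Rectified linear unit: pass positives through, clip negatives."""
    return max(0.0, x)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(weights . inputs + bias)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

HIDDEN_W, HIDDEN_B = [[1.0, 1.0], [1.0, 1.0]], [0.0, -1.0]
OUTPUT_W, OUTPUT_B = [[1.0, -2.0]], [0.0]

def forward(x1, x2):
    hidden = dense([x1, x2], HIDDEN_W, HIDDEN_B, relu)
    output = dense(hidden, OUTPUT_W, OUTPUT_B, lambda v: v)
    return output[0]

for a, b in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(a, b, "->", forward(a, b))
# 0 0 -> 0.0
# 0 1 -> 1.0
# 1 0 -> 1.0
# 1 1 -> 0.0
```

The hidden layer produces intermediate features (how many inputs are on, and whether both are on), and the output layer combines them. Deep networks stack many such layers.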

Conclusion

Artificial intelligence (AI) is impacting every field of life and is making an impressive mark on elementary education in the 21st century. Yet many people feel threatened by fictional, pessimistic depictions of AI. In the face of the fear that AI machines will become future destroyers of humanity, an introduction to the basic AI frameworks and workings that suggest otherwise is necessary. Basic concepts such as machine learning and its core branches, as well as deep learning, show that AI machines are tools trained to be intelligent, with or without supervision. Their workings rule out the possibility of AI inventions becoming more intelligent or more powerful than they are programmed to be and undertaking destructive missions against humanity. Thus, contrary to terrifying science fiction accounts, AI mechanisms are mainly designed for solving humanity's problems.

Author: Nyah Best Anselem

About the Author

Email: bestnyah7@gmail.com

Twitter: @Bee_Nyah_

LinkedIn: Best Nyah